3 Product Management learnings from building a simple AI-powered App

01-23-2025

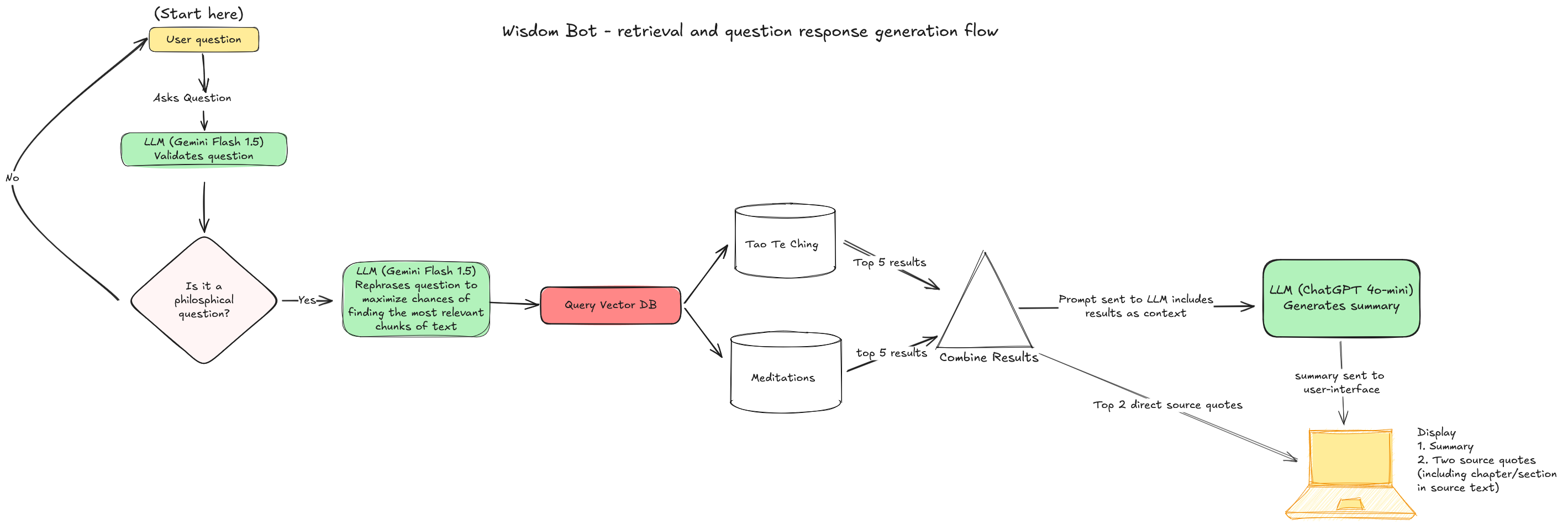

Over the holidays, I built a simple AI-powered web app to gain hands-on experience with LLM APIs and Retrieval Augmented Generation (RAG) techniques. The user experience was simple: answer users' questions with relevant wisdom drawn solely from two philosophical texts - Meditations by Marcus Aurelius and the Tao Te Ching by Laozi.

(Note: unfortunately I had to take the link down temporarily, as some of the model versions used are now deprecated.)

I'll focus on general learnings about working with LLMs here, leaving RAG and document ingestion/retrieval processes for another discussion.

1. Prompt Engineering was more important and time-intensive than I expected

LLM-powered applications and agents rely heavily on behind-the-scenes prompting. RAG applications enhance LLM prompts with relevant text context from a knowledge corpus (for example, your company's FAQs). In my application's flow, three different LLM prompts occurred between user query and final output.

It turns out that crafting, testing and refining the three prompts I used in the flow was one of the most time-consuming aspects of the project.
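To make "enhancing a prompt with relevant text context" concrete, here is a minimal sketch of how a RAG prompt might be assembled. It is not the code from my app: the function name `build_rag_prompt`, the instruction wording, and the placeholder passages are all assumptions for illustration, and the retrieval step that would supply the passages is left out.

```python
def build_rag_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's question into one prompt."""
    # Number each passage so the model (and a human reviewer) can tell them apart.
    context = "\n\n".join(
        f"[{i}] {text.strip()}" for i, text in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the passages below. "
        "If they do not contain an answer, say so.\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {question.strip()}"
    )


# Placeholder passages (hypothetical); a real app would get these from retrieval.
prompt = build_rag_prompt(
    "How do I stay calm under pressure?",
    ["<passage retrieved from Meditations>", "<passage retrieved from the Tao Te Ching>"],
)
print(prompt)
```

Even in a sketch this small, every choice (the instruction wording, the passage order, what to do when nothing relevant is found) is something that has to be tested and tuned.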

👉Takeaway: Crafting prompts is an iterative test-and-refine process, and each prompt should be checked against diverse user inputs and scenarios.

2. Using an LLM to rephrase user queries led to more consistent results

As I tested my app with family and friends, I observed that the queries were often too broad, vague, or keyword-focused. This makes sense because: 1) Google has trained us to search using keywords, and 2) chat interfaces encourage conversational shorthand where we assume the recipient understands unspoken context.

My use case needed queries to be somewhat philosophical in nature to yield relevant results from the provided texts. Luckily, LLMs are really good at summarizing and rewriting, so I added a step to do just that. A question like "How can I deal with a difficult co-worker?" (which yielded mediocre results) became "How can I maintain my inner peace and composure when interacting with challenging individuals?"
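A rewrite step like this can be sketched in a few lines. This is an illustration under assumptions, not my app's actual code: `rewrite_query`, `REWRITE_INSTRUCTIONS`, and the `complete` parameter are hypothetical names, and `complete` stands for whatever function sends a prompt to your LLM provider and returns its text. A fake version is used here so the example runs offline.

```python
from typing import Callable

# Hypothetical instruction text; the real wording would need its own iteration.
REWRITE_INSTRUCTIONS = (
    "Rewrite the user's question as a broader, reflective question about "
    "character, emotions, or how to live. Keep the original intent. "
    "Return only the rewritten question."
)


def rewrite_query(user_query: str, complete: Callable[[str], str]) -> str:
    """Ask an LLM to rephrase the query; fall back to the original if it returns nothing."""
    prompt = f"{REWRITE_INSTRUCTIONS}\n\nUser question: {user_query.strip()}"
    rewritten = complete(prompt).strip()
    return rewritten or user_query.strip()


def fake_complete(prompt: str) -> str:
    # Stand-in for a real LLM call so the sketch runs without network access.
    return (
        "How can I maintain my inner peace and composure when "
        "interacting with challenging individuals?"
    )


print(rewrite_query("How can I deal with a difficult co-worker?", fake_complete))
```

Passing the LLM call in as a function keeps the rewrite step easy to test on its own, and the fallback means an empty response never blocks the rest of the flow.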

👉Takeaway: Treat an LLM like a really smart intern on their first day. Clear and precise questions with ample context yield better results. For some use cases, using one LLM request to clarify the user's question before sending a separate request to complete the task might yield better results.

3. LLMs are inherently inconsistent

Remember: LLM outputs are fundamentally probabilistic. Ask the same question twice and you may get different answers. This isn't always a bad thing - it's what allows for creative responses, which may be exactly what you want when writing or brainstorming ideas.

The APIs provide some control over this through the "temperature" setting (the allowed range depends on the provider, commonly 0 to 1 or 0 to 2). For my use case, keeping the temperature low (around 0.1) provided safer, more predictable outputs. However, even at the lowest settings, 100% consistency isn't guaranteed, especially if inputs vary.
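For intuition on why a low temperature gives more predictable outputs, here is a small sketch of the textbook formulation: the model's raw scores are divided by the temperature before being turned into probabilities. The scores below are made up, `softmax_with_temperature` is my own illustrative helper, and actual providers may implement sampling differently, so treat this as a mental model rather than a description of any specific API.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
logits = [2.0, 1.5, 0.5]
for t in (0.1, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these scores, the top candidate takes nearly all of the probability at 0.1, while at 2.0 the three candidates end up much closer together. Note that the other candidates never drop to exactly zero, which is one way to picture why a low setting reduces variation without eliminating it.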

👉Takeaway: Once again, iterating on prompts and testing is key - particularly if your agent flow requires a high degree of predictability or is making decisions.

Would love to hear any experiences you have with building #AI applications and what role Product will/should play in defining and refining these components - is this the role of technology, product or both?